Tronkostroglutegunkr


Modern computer monitors only go up to 120FPS, but I want to test human time perception, so I'll need something far above that.

120fps is already well above human perception.

That's talking about continuity of vision, which is about images overlapping. I'm talking about "What's the smallest visual increment a human can perceive."

I've tested that myself using Flash and video editors. If I run the frame rate at 100FPS and place a single random frame of something completely different into a video, I can spot it (though there is some shredding of the image, because the frame rate is approaching the monitor refresh rate). This shows that an increment of 1/100th of a second is an understandable unit of time for the human brain. Note: this holds so long as the inserted frame has normal or high contrast. It works at the refresh rate itself because bright images beat dark images in perception. There is a light/eye element here.

Since I can still see it at a cap of 1/100th to 1/120th of a second, I need a display with a much higher FPS to test the limits. I don't think such high frame rates are actually hard to achieve, since it's digital, but they seem pointless for general use, so nobody makes them as far as I can tell.

That's informative, thanks.

However, if the LED is displaying a dim color, and the different color is a bright color, you WILL see the flicker. The human eye has no lower limit to flicker detection, assuming the flicker is sufficiently brightened to compensate for its shortness. I predict that you won't be able to detect the green flash, but I also predict that, as long as they aren't consecutive, as you increase the number of green flashes the light from the LED will appear increasingly yellow.

"120fps is already well above human perception."

Recently, vision researchers have definitively proven this incorrect.

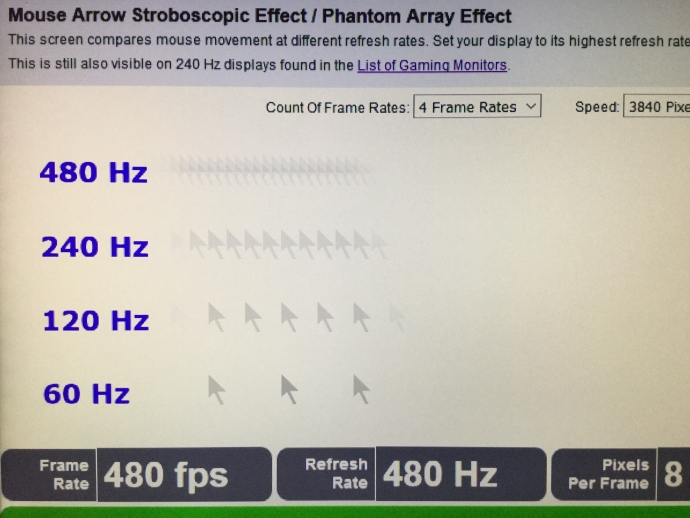

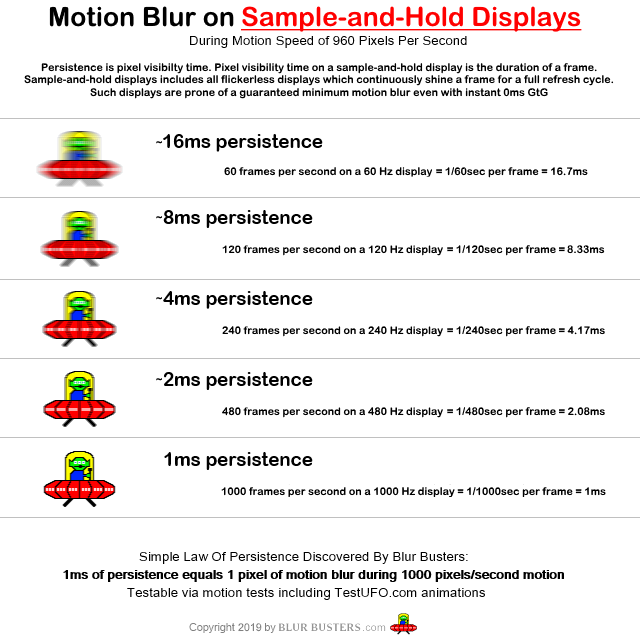

Finite-framerate displays suffer from sample-and-hold effects, stroboscopic/wagonwheel effects, or both. Eye-tracking-based motion blur results from sample-and-hold (also called hold time or persistence). Lots of scientific papers cover this already (search science paper sites for "sample-and-hold" or "hold-type" displays). Mathematically, 1ms of persistence equals 1 pixel of motion blur during 1000 pixels/sec motion. A flicker-free 1000fps@1000Hz display would eliminate a lot of stroboscopic effects (wagonwheel artifacts) AND eliminate motion blur at the same time, all without using flicker. This is great for Holodeck situations (e.g. VR goggles). And you wouldn't need to add artificially generated motion blur. You'd finally just let the human brain add its own natural motion blur, with none forced upon you by the graphics or by the display. So 1000fps@1000Hz would be far closer to reality, while eliminating the stroboscopic/wagonwheel artifact problem.
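To put numbers on the "1ms of persistence equals 1 pixel of motion blur during 1000 pixels/sec motion" rule, here is a minimal Python sketch. The function names are made up for illustration, and it assumes an idealized flicker-free sample-and-hold display where each frame stays lit for the entire refresh interval (so persistence is simply 1000 / refresh rate, in milliseconds). Real panels add pixel response time on top of this, so treat the results as a lower bound rather than a measurement.

```python
def hold_time_ms(refresh_hz: float) -> float:
    """Persistence of an idealized flicker-free sample-and-hold display.

    Assumption: each frame is shown for the whole refresh interval.
    """
    return 1000.0 / refresh_hz


def motion_blur_px(persistence_ms: float, speed_px_per_s: float) -> float:
    """Eye-tracking motion blur: 1 ms of persistence = 1 px per 1000 px/s."""
    return persistence_ms * speed_px_per_s / 1000.0


if __name__ == "__main__":
    speed = 1000.0  # pixels per second, the motion speed used in the rule above
    for hz in (60, 120, 1000):
        persistence = hold_time_ms(hz)
        blur = motion_blur_px(persistence, speed)
        print(f"{hz:>5} Hz: {persistence:.2f} ms persistence, "
              f"{blur:.2f} px of blur at {speed:.0f} px/s")
```

Under these assumptions a 120Hz sample-and-hold display still smears a 1000 pixels/sec object across roughly 8 pixels, while a 1000Hz one gets that down to about 1 pixel.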

There is a TestUFO animation (eye tracking motion blur) that is an excellent demo of the "pick-your-poison" problem of finite-refresh displays. The problem is very clearly visible to the human eye even when viewing on a 120Hz gaming LCD or a 200Hz scientific CRT.

-- Animation has motion blur when viewing on LCDs

-- Animation has stroboscopic effect when viewing on CRTs

To fix both at the same time (important for VR / Holodeck situations), you would need a refresh rate that resembles something infinite. That is not possible. However, a 1000fps@1000Hz display would sufficiently reduce or eliminate both the stroboscopic effect and motion blur. Even the Oculus people have said this, and big names in the game industry (Michael Abrash of Valve Software, John Carmack of id Software) have already confirmed the benefits of ultrashort-persistence flicker-free displays like this.

Did you know AMOLED generally has more motion blur than a 120Hz+ gaming LCD?